The latency wall impeding successful mainframe migrations

In mainframe migration, there’s a dirty secret that few talk about: the latency wall.

You can preserve all the business logic, understand every semiotic pattern, and translate institutional theories perfectly. But if your migrated system is ten times slower than the original, you’ve failed.

This is a hard challenge and one that semantic understanding alone cannot solve.

The reason is simple: physics.

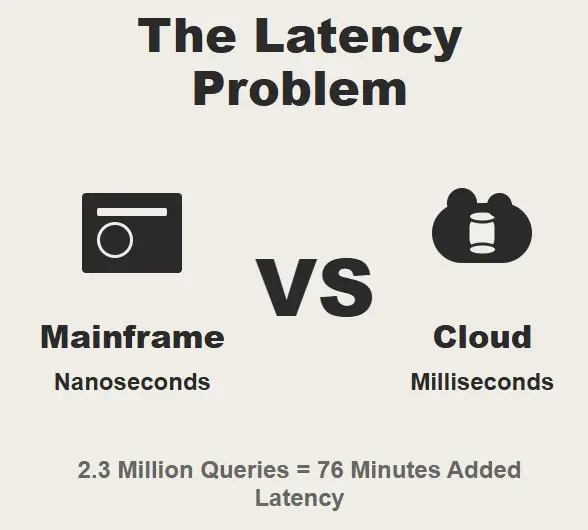

On a mainframe, your application and data live in the same machine. When a COBOL or PL/1 program reads from a VSAM file or queries a DB2 table, that data is local. The access time is measured in nanoseconds. It’s essentially zero latency. Move that same application to the cloud, and everything changes.

Now your Java application runs in one container, your database in another, possibly on a different physical machine. Every database query now travels over the network. That’s milliseconds instead of nanoseconds. For a single query, this doesn’t matter. A few milliseconds is imperceptible to humans. But mainframe batch jobs don’t make single queries. They make millions of queries.

We worked with a company where a single batch job net made 2.3 million database reads during the nightly batch run.

On the mainframe, with zero latency, this took 45 minutes. When we migrated to cloud, preserving all the business logic perfectly, the same job took 8 hours.

The math is brutal. If each query takes 2 milliseconds (you can only dream of 2ms if you are using AWS RDS) instead of 2 nanoseconds, and you make 2.3 million queries, you’ve added 76 minutes of pure network latency. That’s before any other cloud overhead. You can’t solve this with better algorithms or smarter caching.

It’s the speed of light that is the limiting factor. Network packets take time to travel. Most migration approaches pretend this problem doesn’t exist. They focus on functional equivalence and hope the performance issues will resolve themselves. They won’t.

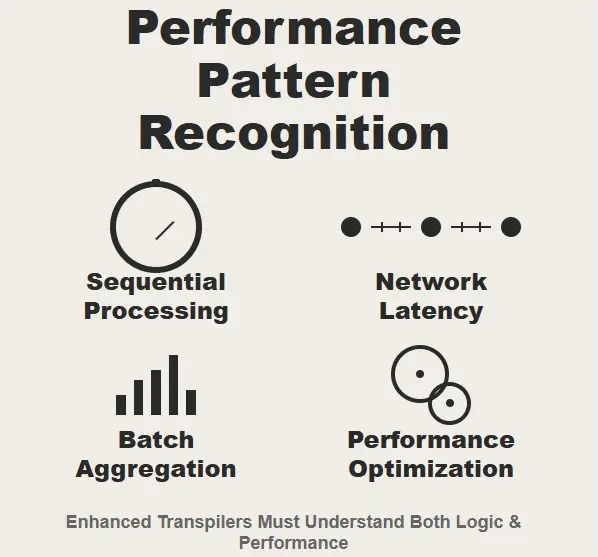

At this point someone, probably from a GSI or CSP will tell you that the application should be rewritten. Completely 100% WRONG. You need to make the migration tooling smarter to understand the original intent and automatically apply performance pattern recognition and alleviation to the migrated code.

Here’s what we’re learning as we build our enhanced transpilers: understanding what the code means is only half the battle. Understanding how it performed is the other half. This is why we’re not just adding semiotic analysis to our transpilers but we’re adding performance pattern recognition.

When our enhanced transpilers encounter a COBOL or PL/1 program that processes large datasets, they don’t just understand the business logic. They understand the performance characteristics. They recognize patterns like sequential processing, batch aggregation, locality, and blocking. These patterns aren’t just business logic, they’re performance theories.

They represent decades of learning about how to process large amounts of data efficiently within mainframe constraints but these performance theories often translate poorly to cloud environments. The patterns that made sense for zero-latency local storage don’t work the same way with network-attached databases.

Organizations that ignore the latency wall discover it the hard way. They end up with functionally correct systems that are operationally unusable. They preserve the business logic but lose the performance characteristics that made the original systems viable.

Our enhanced transpilers are designed to preserve both. They understand that performance is semantics, and that maintaining operational characteristics is just as important as maintaining business logic.

The cloud gives us new capabilities for processing large datasets efficiently. But only if we understand how to use them.

That’s the challenge we’re tackling at Heirloom. It’s not just about translating code. It’s about translating performance. And that’s a problem that requires more than just semantic understanding.

It requires reimagining the very patterns that made the original systems work.