The Chimp Paradox and Legacy Language Translation – Part 2

Archive for August, 2025

-

August 14th, 2025

The Chimp Paradox and Legacy Language Translation – Part 2

How Heirloom Implements the Three-System Solution — Graham Cunningham, CTO

This post is for CIOs and CTOs at legacy-heavy institutions (banks, insurers, and public services) who need to modernize without compromising stability or security.

Next Post: Coming soon...

Previous Post: The Chimp Paradox and Legacy Language Translation - Part 1

The Failure of Traditional Modernization Approaches

Most modernization projects fail because they try to do everything at once. Companies attempt massive rewrites, betting their business on experimental approaches that promise to solve decades of technical debt overnight. The result? Code that runs but that no one understands, which brings you back to square one or, worse, creates new vulnerabilities in mission-critical systems.

Heirloom Computing takes a staged approach to avoid that trap. We help you build resilient digital systems that last, using a proven two-stage process that delivers immediate value while preparing you for long-term success.

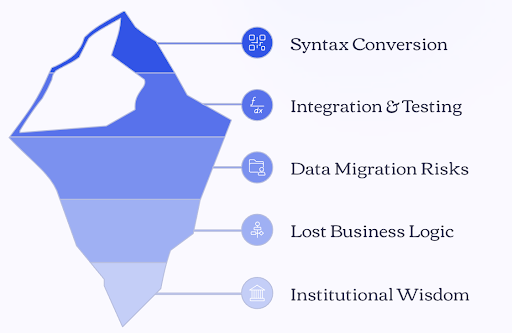

The Challenge: Why Most Legacy Modernization Fails

Consider what typically happens when a European bank decides to modernize its core banking system. The modernization team promises to replace 40 years of COBOL with modern Java in 18 months. Eighteen months later (if they are lucky), they have Java code that compiles and passes basic tests, but when business rules need updating, no one understands how the new system actually works. The original business logic, refined through decades of real-world use, has been lost in translation.

This happens because traditional approaches treat code translation as a purely technical problem. They convert syntax without preserving the business theories that make legacy systems work. The deeper knowledge about why the system was built the way it was, the accumulated wisdom about edge cases, regulatory requirements, and business rules, disappears.

The Heirloom Two-Stage Solution

At Heirloom Computing, we’ve built our entire approach around a key insight: you shouldn’t have to choose between safety and modernization. You need both, but at different times and for different reasons.

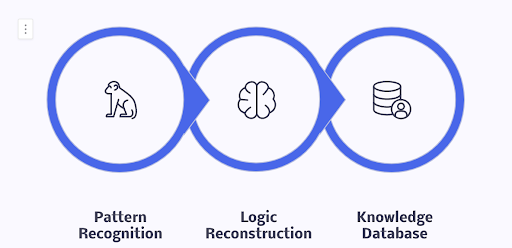

We combine three capabilities into one orchestrated system:

– pattern recognition (Chimp)

– logic reconstruction (Human)

– accumulated best practices (Computer)

Without this orchestration, pattern recognition produces superficial translations, logic reconstruction lacks context, and best practices remain isolated. Together, they preserve both the “what” and the “why” of your legacy systems.

Stage One: Immediate Value Through Proven Replatforming

Stage one uses our battle-tested transpilers to replatform your legacy systems on the JVM. These aren’t experimental tools; they’re proven in production across dozens of enterprise deployments. Your COBOL runs on modern infrastructure immediately. Your PL/I integrates with contemporary tools. You’re off the mainframe, costs drop by 60-80%, and everything works exactly as before.

The specific risk we eliminate is the catastrophic failure mode in which modernization projects consume millions of dollars and years of effort, only to produce systems that work differently from the original in subtle but business-critical ways.

For example, Venerable, a US annuities company, used Stage 1 to shut down its mainframe in less than a year, eliminating mainframe costs entirely and cutting OpEx by 75% while maintaining full regulatory compliance. The system handled the same transaction volumes with identical business logic, but now ran on infrastructure their team could manage and scale, with their choice of hardware vendor.

Stage Two: Long-Term Value Through Intelligent Refactoring

When you’re ready, when you have the time, budget, and strategic need, use our semiotic transpilers to refactor the code into idiomatic Java. This is where theory reconstruction happens. This is where you get code your teams can actually maintain, extend, and integrate with modern AI systems.

Our process reconstructs the business logic behind legacy code patterns, not just the syntax. A claims processing rule that took 200 lines of dense COBOL becomes 50 lines of clear, documented Java that new developers can understand and modify confidently.
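To make that contrast concrete, here is a minimal Java sketch of the difference between a literal, COBOL-shaped translation and an idiomatic refactoring of the same rule. The claims rule itself (pay the amount above a deductible at a coverage rate, capped at a policy limit), the `ws...` field names, the `para...` method names, and the HALF_UP rounding choice are all hypothetical, invented for illustration; this is not output from Heirloom’s transpilers.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical rule, for illustration only:
// payable = min(max(claim - deductible, 0) * coveragePct, policyLimit)

/** Literal, COBOL-shaped translation: shared mutable "working storage" and paragraph methods. */
class ClaimCalcLiteral {
    BigDecimal wsClaimAmt = BigDecimal.ZERO;
    BigDecimal wsDeductible = BigDecimal.ZERO;
    BigDecimal wsCoveragePct = BigDecimal.ZERO;
    BigDecimal wsPolicyLimit = BigDecimal.ZERO;
    BigDecimal wsPayable = BigDecimal.ZERO;
    String wsOverLimitFlag = "N";

    void para1000Main() {
        para2000Subtract();
        para3000ApplyPct();
        para4000CheckLimit();
    }

    void para2000Subtract() {
        wsPayable = wsClaimAmt.subtract(wsDeductible);
        if (wsPayable.signum() < 0) {
            wsPayable = BigDecimal.ZERO;
        }
    }

    void para3000ApplyPct() {
        wsPayable = wsPayable.multiply(wsCoveragePct).setScale(2, RoundingMode.HALF_UP);
    }

    void para4000CheckLimit() {
        if (wsPayable.compareTo(wsPolicyLimit) > 0) {
            wsPayable = wsPolicyLimit;
            wsOverLimitFlag = "Y";
        }
    }
}

/** Idiomatic refactoring: immutable inputs and one documented, side-effect-free rule. */
record Claim(BigDecimal amount, BigDecimal deductible, BigDecimal coveragePct, BigDecimal policyLimit) {

    /** Pays the amount above the deductible at the coverage rate, capped at the policy limit. */
    BigDecimal payable() {
        BigDecimal aboveDeductible = amount.subtract(deductible).max(BigDecimal.ZERO);
        BigDecimal covered = aboveDeductible.multiply(coveragePct).setScale(2, RoundingMode.HALF_UP);
        return covered.min(policyLimit);
    }
}

class ClaimDemo {
    public static void main(String[] args) {
        ClaimCalcLiteral legacy = new ClaimCalcLiteral();
        legacy.wsClaimAmt = new BigDecimal("1200.00");
        legacy.wsDeductible = new BigDecimal("200.00");
        legacy.wsCoveragePct = new BigDecimal("0.80");
        legacy.wsPolicyLimit = new BigDecimal("5000.00");
        legacy.para1000Main();

        Claim claim = new Claim(new BigDecimal("1200.00"), new BigDecimal("200.00"),
                new BigDecimal("0.80"), new BigDecimal("5000.00"));
        System.out.println(legacy.wsPayable + " " + claim.payable()); // 800.00 800.00
    }
}
```

Both versions compute the same payable amount. The idiomatic one states the rule in a single documented method with no shared mutable state, which is what makes it safer for a new developer to change.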

The Three-System Architecture in Practice

Our translation process leverages three coordinated systems, each preventing the others’ failure modes:

Pattern Recognition (Chimp): The LLM quickly identifies obvious patterns and provides initial translation scaffolding. Controlled and supervised to prevent shallow conversions.

Logic Reconstruction (Human): The MCP (Model Context Protocol) server performs deep analysis of business rules, data relationships, and integration requirements. It takes the time needed for thoughtful analysis.

Knowledge Database (Computer): The vector database stores successful translation patterns from previous projects, ensuring every customer benefits from accumulated institutional knowledge.

Without orchestration, pattern recognition produces code that compiles but doesn’t preserve business meaning. Logic reconstruction without accumulated knowledge repeats solved problems. Knowledge databases without fresh analysis become stale and miss context-specific requirements.
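One way to picture this orchestration is as a pipeline in which each system supplies what the others lack. The sketch below assumes hypothetical interfaces (`PatternRecognizer` for the LLM, `LogicAnalyzer` for the MCP server, `PatternStore` for the vector database); none of these names come from Heirloom’s product. The in-memory store uses plain cosine similarity as a stand-in for a real vector database, and the supervision step is a deliberately crude check that every extracted business rule is still traceable in the draft.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// All type names here are hypothetical; they illustrate the three roles, not a real API.

/** "Chimp": fast LLM drafting, steered by extracted rules and prior patterns. */
interface PatternRecognizer {
    String draft(String legacySource, List<String> ruleIds, List<String> priorPatterns);
}

/** "Human": slower analysis that extracts business-rule identifiers from legacy code. */
interface LogicAnalyzer {
    List<String> extractRules(String legacySource);
}

/** "Computer": past translation patterns, retrieved by embedding similarity. */
final class PatternStore {
    private record Entry(double[] embedding, String pattern) {}

    private final List<Entry> entries = new ArrayList<>();

    void add(double[] embedding, String pattern) {
        entries.add(new Entry(embedding, pattern));
    }

    /** Returns the k stored patterns whose embeddings are most similar (cosine) to the query. */
    List<String> nearest(double[] query, int k) {
        return entries.stream()
                .sorted(Comparator.comparingDouble((Entry e) -> -cosine(e.embedding(), query)))
                .limit(k)
                .map(Entry::pattern)
                .toList();
    }

    private static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }
}

/** Orchestration: analysis and prior knowledge go in, a supervised draft comes out. */
final class TranslationPipeline {
    private final PatternRecognizer chimp;
    private final LogicAnalyzer human;
    private final PatternStore computer;

    TranslationPipeline(PatternRecognizer chimp, LogicAnalyzer human, PatternStore computer) {
        this.chimp = chimp;
        this.human = human;
        this.computer = computer;
    }

    String translate(String legacySource, double[] embedding) {
        List<String> ruleIds = human.extractRules(legacySource);  // why the code exists
        List<String> prior = computer.nearest(embedding, 2);       // what worked before
        String draft = chimp.draft(legacySource, ruleIds, prior);  // fast scaffolding
        // Crude supervision: reject a draft that no longer traces every extracted rule.
        for (String id : ruleIds) {
            if (!draft.contains(id)) {
                throw new IllegalStateException("Draft dropped business rule " + id);
            }
        }
        computer.add(embedding, draft); // accepted work becomes future knowledge
        return draft;
    }
}

class PipelineDemo {
    public static void main(String[] args) {
        PatternStore store = new PatternStore();
        store.add(new double[] {1, 0}, "PERFORM VARYING -> for loop");
        store.add(new double[] {0, 1}, "EVALUATE -> switch");
        LogicAnalyzer human = src -> List.of("RULE-1", "RULE-2");
        PatternRecognizer chimp = (src, ids, prior) ->
                "// traces: " + String.join(", ", ids) + "\nfinal class Translated {}";
        System.out.println(new TranslationPipeline(chimp, human, store)
                .translate("COMPUTE WS-PAYABLE = ...", new double[] {0.9, 0.1}));
    }
}
```

Feeding accepted translations back into the store is one simple way to keep the knowledge base from going stale, while the rule check stops a fast but shallow draft from passing unnoticed.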

Building Interpretants That Preserve Business Logic

Our MCP server functions as an interpretant-building system, looking beyond code patterns to infer the business logic behind them. It constructs semantic models that capture not just what the code does, but why it does it.

When we encounter a payment validation routine in a German bank’s COBOL system, we don’t just convert the syntax. We identify that it implements specific EU regulatory requirements, handles currency conversion edge cases, and includes fraud detection logic developed over years of production use. The resulting Java preserves all of this institutional knowledge in maintainable, documented form.
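A small illustration of what preserving the “why” can look like in the resulting Java: a runtime annotation that carries a rule’s legacy source and its business rationale alongside the code. The `@BusinessRule` annotation and the `PAYVAL 2100-CHECK-IBAN` paragraph name are hypothetical, and the IBAN mod-97 check (ISO 13616) is a well-known real-world payment validation used here as a stand-in; it is not a description of the German bank’s routine.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.Locale;

/** Hypothetical traceability annotation: where a rule was reconstructed from, and why it exists. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface BusinessRule {
    String source(); // legacy location (hypothetical naming)
    String why();    // business or regulatory rationale, not just mechanics
}

final class PaymentValidation {
    private PaymentValidation() {}

    @BusinessRule(
            source = "PAYVAL 2100-CHECK-IBAN (hypothetical)",
            why = "Catch mistyped account numbers before funds move: ISO 13616 IBAN mod-97 check")
    static boolean isValidIban(String raw) {
        String iban = raw.replace(" ", "").toUpperCase(Locale.ROOT);
        // Basic shape: country code, two check digits, alphanumeric account part, at most 34 chars.
        if (iban.length() > 34 || !iban.matches("[A-Z]{2}[0-9]{2}[A-Z0-9]{11,}")) {
            return false;
        }
        // Move the first four characters to the end, expand letters to 10..35, reduce mod 97 as we go.
        String rearranged = iban.substring(4) + iban.substring(0, 4);
        int remainder = 0;
        for (char c : rearranged.toCharArray()) {
            if (c >= '0' && c <= '9') {
                remainder = (remainder * 10 + (c - '0')) % 97;
            } else {
                remainder = (remainder * 100 + (c - 'A' + 10)) % 97;
            }
        }
        return remainder == 1;
    }
}

class RuleDemo {
    public static void main(String[] args) throws NoSuchMethodException {
        System.out.println(PaymentValidation.isValidIban("DE89 3704 0044 0532 0130 00")); // true
        BusinessRule rule = PaymentValidation.class
                .getDeclaredMethod("isValidIban", String.class)
                .getAnnotation(BusinessRule.class);
        System.out.println(rule.why());
    }
}
```

Because the rationale is retained at runtime, reviewers or tooling can list every reconstructed rule and its reason through reflection instead of relying on comments alone.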

Why This Approach Delivers Real Value

The difference between replatforming and refactoring is the difference between immediate relief and long-term capability. Replatforming gets you off expensive mainframes right away, critical for budget planning and infrastructure management. Refactoring gives you code that your teams can actually work with over time, essential for innovation and AI integration.

Most legacy modernization projects fail because they try to do both simultaneously. Heirloom’s staged approach eliminates this risk.

Stage one delivers immediate value with proven technology. Stage two provides long-term capability with advanced methods. You don’t have to choose; you get both on your timeline.

Because you’re already running on our proven transpilers, you control the refactoring pace completely. Refactor customer-facing modules first for competitive advantage. Leave stable back-office systems for later. You manage both timeline and risk.

Success Metrics from Real Deployments

A European pensions company used our two-stage approach to modernize its pension calculation system. Stage 1 replatforming took 12 weeks and reduced operating costs by 80%. Over the following 3 months, they refactored the frontend of the system into idiomatic Java, reducing system complexity and enabling integration with modern analytics tools.

The measurable outcomes our customers achieve:

– Immediate cost reduction: 60-80% infrastructure savings within months

– Risk elimination: Zero business logic loss during translation

– Team efficiency: COBOL and PL/1 developers remained useful as SMEs

– Future readiness: Systems prepared for AI integration without security gaps

Established Foundation for an AI-Ready Future

AI is part of the future, but to benefit from it fully, you need strong foundations. Legacy systems with undocumented business logic and hidden vulnerabilities become AI security risks. Modern systems with clear, maintainable code become AI opportunity platforms.

Heirloom has been solving this challenge since 2010, long before AI hype cycles. We’re not experimental. We’re established, proven, and built for the long term. While others ask you to bet your business on untested approaches, we deliver systems that work today and adapt tomorrow.


The Heirloom Advantage: Proven Technology, Advanced Capability

While competitors struggle with syntax errors or ask you to risk everything on experimental translation, Heirloom delivers working systems that preserve decades of hard-won business knowledge. We’re not just building translation tools; we’re building understanding machines that help you maintain a competitive advantage while reducing technical risk.

The result? Java code that your teams can maintain, extend, and trust. Not just code that compiles, but code that makes business sense. In a world where digital resilience determines competitive advantage, that’s the foundation every enterprise needs.


-

August 7th, 2025

Mainframe Migration – The Latency Challenge

The latency wall impeding successful mainframe migrations — Graham Cunningham, CTO

In mainframe migration, there’s a dirty secret that few talk about: the latency wall.

You can preserve all the business logic, understand every semiotic pattern, and translate institutional theories perfectly. But if your migrated system is ten times slower than the original, you’ve failed.

This is a hard challenge, and one that semantic understanding alone cannot solve. The reason is simple: physics.

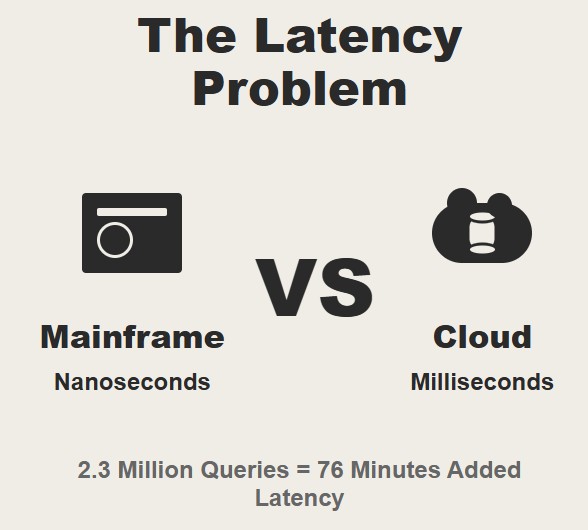

On a mainframe, your application and data live in the same machine. When a COBOL or PL/1 program reads from a VSAM file or queries a DB2 table, that data is local. The access time is measured in nanoseconds. It’s essentially zero latency. Move that same application to the cloud, and everything changes.

Now your Java application runs in one container, your database in another, possibly on a different physical machine. Every database query now travels over the network. That’s milliseconds instead of nanoseconds. For a single query, this doesn’t matter. A few milliseconds is imperceptible to humans. But mainframe batch jobs don’t make single queries. They make millions of queries.

We worked with a company where a single batch job made 2.3 million database reads during its nightly run.

On the mainframe, with essentially zero latency, this took 45 minutes. When we migrated it to the cloud, preserving all the business logic perfectly, the same job took 8 hours.

The math is brutal. If each query takes 2 milliseconds instead of 2 nanoseconds (and you can only dream of 2 ms if you are using AWS RDS), and you make 2.3 million queries, you’ve added about 76 minutes of pure network latency. That’s before any other cloud overhead. You can’t solve this with better algorithms or smarter caching. The limiting factor is the speed of light: network packets take time to travel.

Most migration approaches pretend this problem doesn’t exist. They focus on functional equivalence and hope the performance issues will resolve themselves. They won’t.
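The latency arithmetic in this post can be checked with a few lines of Java. This sketch assumes, as the text does, that every read is one synchronous round trip with a fixed cost (2 ns locally, 2 ms over the network) and ignores all other overhead, so the result is a floor on the added waiting time, not a model of the full 8-hour run. The class and method names are illustrative only.

```java
import java.util.Locale;

/** Back-of-envelope latency floor: every read is one synchronous round trip, nothing else counted. */
final class LatencyBudget {
    private LatencyBudget() {}

    /** Total time spent waiting on reads, in seconds. */
    static double waitSeconds(long reads, double secondsPerRead) {
        return reads * secondsPerRead;
    }

    public static void main(String[] args) {
        long reads = 2_300_000L;
        double localSeconds = waitSeconds(reads, 2e-9);   // 2 ns per local read
        double networkSeconds = waitSeconds(reads, 2e-3); // 2 ms per network round trip
        System.out.println(String.format(Locale.ROOT, "local: %.1f ms, network: %.1f min",
                localSeconds * 1_000, networkSeconds / 60)); // local: 4.6 ms, network: 76.7 min
    }
}
```

The same 2.3 million reads cost under 5 milliseconds of waiting locally and over an hour across the network, purely because of per-read latency.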

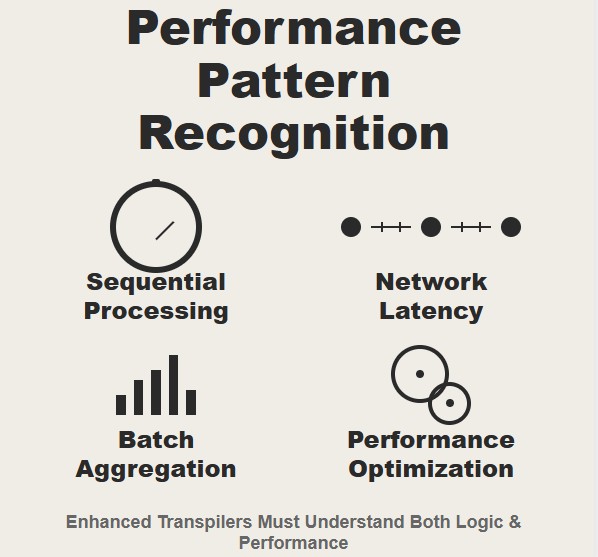

At this point someone, probably from a global systems integrator (GSI) or cloud service provider (CSP), will tell you that the application should be rewritten. Completely, 100% WRONG. You need smarter migration tooling: tooling that understands the original intent, recognizes performance patterns, and automatically applies remedies to the migrated code.

Here’s what we’re learning as we build our enhanced transpilers: understanding what the code means is only half the battle. Understanding how it performed is the other half. This is why we’re adding not only semiotic analysis to our transpilers but also performance pattern recognition.

When our enhanced transpilers encounter a COBOL or PL/1 program that processes large datasets, they don’t just understand the business logic. They understand the performance characteristics. They recognize patterns like sequential processing, batch aggregation, locality, and blocking. These patterns aren’t just business logic; they’re performance theories.
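Blocking is the easiest of these patterns to illustrate. A literal translation of a read-per-record loop makes one network round trip per record; a translation that recognizes the access pattern can fetch keys in blocks while keeping the original processing order. In this minimal sketch, `RemoteTable` and `BatchJob` are hypothetical, `getAll` stands in for a multi-key query such as a SQL `WHERE key IN (...)`, and round trips are counted rather than timed.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Stand-in for a network-attached table; counts round trips instead of sleeping. */
final class RemoteTable {
    private final Map<Integer, String> rows = new HashMap<>();
    private long roundTrips = 0;

    RemoteTable(int size) {
        for (int k = 0; k < size; k++) rows.put(k, "row-" + k);
    }

    /** One key per call: what a literal read-per-record translation produces. */
    String get(int key) {
        roundTrips++;
        return rows.get(key);
    }

    /** Many keys per call: the cloud-side equivalent of a blocked read. */
    Map<Integer, String> getAll(List<Integer> keys) {
        roundTrips++;
        Map<Integer, String> out = new HashMap<>();
        for (int k : keys) out.put(k, rows.get(k));
        return out;
    }

    long roundTrips() {
        return roundTrips;
    }
}

final class BatchJob {
    /** Literal translation: one synchronous round trip per record. */
    static List<String> perRecord(RemoteTable table, List<Integer> keys) {
        List<String> result = new ArrayList<>(keys.size());
        for (int k : keys) result.add(table.get(k));
        return result;
    }

    /** Blocked translation: fetch keys in chunks, preserving the original processing order. */
    static List<String> blocked(RemoteTable table, List<Integer> keys, int blockSize) {
        List<String> result = new ArrayList<>(keys.size());
        for (int start = 0; start < keys.size(); start += blockSize) {
            List<Integer> block = keys.subList(start, Math.min(start + blockSize, keys.size()));
            Map<Integer, String> fetched = table.getAll(block);
            for (int k : block) result.add(fetched.get(k));
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> keys = new ArrayList<>();
        for (int k = 0; k < 10_000; k++) keys.add(k);

        RemoteTable a = new RemoteTable(10_000), b = new RemoteTable(10_000);
        boolean same = perRecord(a, keys).equals(blocked(b, keys, 500));
        System.out.println(same + " " + a.roundTrips() + " vs " + b.roundTrips()); // true 10000 vs 20
    }
}
```

Round trips fall from N to ceil(N / blockSize) with identical results. Under the 2 ms assumption used earlier, and ignoring the larger payload per call, blocks of 500 would turn 2.3 million round trips into 4,600.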

These patterns represent decades of learning about how to process large amounts of data efficiently within mainframe constraints, but these performance theories often translate poorly to cloud environments. The patterns that made sense for zero-latency local storage don’t work the same way with network-attached databases.

Organizations that ignore the latency wall discover it the hard way. They end up with functionally correct systems that are operationally unusable. They preserve the business logic but lose the performance characteristics that made the original systems viable.

Our enhanced transpilers are designed to preserve both. They understand that performance is semantics, and that maintaining operational characteristics is just as important as maintaining business logic.

The cloud gives us new capabilities for processing large datasets efficiently. But only if we understand how to use them.

That’s the challenge we’re tackling at Heirloom. It’s not just about translating code. It’s about translating performance. And that’s a problem that requires more than just semantic understanding.

It requires reimagining the very patterns that made the original systems work.